Over the past five years, there has been a steady increase in the number of papers withdrawn from top journals – largely after the results were found to be impossible to replicate. This phenomenon has been dubbed a “reproducibility crisis.”

In government, forensic labs have been caught “dry labbing” data, while more than one pharmaceutical manufacturer has been shut down by the FDA after its quality control labs were found to be “testing to compliance” by throwing out data that missed the mark. In some cases, so-called “predatory journals” with weak or nonexistent peer review have been implicated. In others, scientific fraud has been discovered, careers ruined, and coworkers embarrassed.

In some fields of science, such as psychology and oncology, the challenges have been especially numerous. In biology, there have been inadvertent errors with cell lines and with the sourcing of the antibodies on which assays depend; quality was assumed but not validated, and instruments were trusted but not calibrated. In other cases, solutions have been used long after their expiration date, or clinical trial data have been tampered with. All things considered, critics have been given every reason to be hard on scientists. Fake news annoys, but fake science destroys.

What is causing the crisis? An amalgamation of factors is at play – these include more complex science, more sophisticated instruments with which many users are unfamiliar, a highly competitive funding climate, pressure to publish positive results (and to do so ahead of competitor labs), and also – of course – the desire to please the boss.

Three overlapping tendencies account for much of the poor reproducibility observed in published science, the first of which is natural variance. When mixed with confirmation bias – that is, bias that leads us to interpret data in a way that aligns with our existing beliefs – many experiments may never be considered fully repeatable, especially given that individual scientists vary in so many respects. We see the impact of natural variance in meta-analyses of existing reports; in these instances, larger data sets are examined, but often little thought is given to variances introduced by their differing methods.
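To put a rough number on natural variance, here is a minimal sketch of how often an honest repeat of a two-group experiment would reach p < 0.05 when the underlying effect is real. Everything in it is an illustrative assumption rather than data from any study: the function name `replication_power`, the normal (z-test) approximation, the standardized effect size of 0.5, and the group sizes.

```python
from math import sqrt
from statistics import NormalDist


def replication_power(effect_size, n_per_group, alpha=0.05):
    """Approximate chance that a two-group study reaches p < alpha.

    Assumes a two-sided z-test on a standardized mean difference and
    ignores the tiny chance of significance in the wrong direction.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected z-score when the true standardized difference is effect_size.
    expected_z = effect_size * sqrt(n_per_group / 2)
    return 1 - std_normal.cdf(z_crit - expected_z)


# Hypothetical scenario: a real, medium-sized effect at two group sizes.
small_study = replication_power(0.5, 20)
large_study = replication_power(0.5, 100)
print(f"n = 20 per group:  {small_study:.2f}")
print(f"n = 100 per group: {large_study:.2f}")
```

Under these assumptions, the small study has only about a one-in-three chance of "replicating" a perfectly genuine effect, while the larger one succeeds more than nine times in ten. No sloppiness or fraud is required for a repeat to fail.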

The second driver of poor reproducibility is sloppiness in experimental design and execution. Many aspects of an experiment can contribute, including the instruments, reagents, cell cultures and antibodies used, as well as reliance on inadequately validated methods. What’s more, there is often little training given in keeping all these factors under control; important variables are sometimes not accounted for because experimenters are unaware of them.

The third and rarest factor is willful fraud, which is arguably much more visible than the first two influences. Humans have a tendency to lie and have done so throughout history – especially when the temptation outweighs the perceived consequences of being caught. Most researchers start with good intentions, but there are various stressors that may break a researcher’s character. An overbearing lab supervisor, or a financial executive who has conveyed too much promise to investors, can leave scientists wrongfully taking the fall.

Keep in Mind...

"There are lies, damned lies, and statistics." - Benjamin Disraeli

"There's pretty much never been a great idea that didn't begin as heresy that offended someone." - H.L. Mencken

"However beautiful the strategy, you should occasionally look at the results." - Winston Churchill

"Imagination is more important than knowledge." - Albert Einstein

"In times of profound change, the learners inherit the earth, while the learned find themselves beautifully equipped to deal with a world that no longer exists." - Eric Hoffer

"There is the risk you cannot afford to take, and there is the risk you cannot afford NOT to take." - Peter Drucker

"It is not necessary to change. Survival is optional." - W. Edwards Deming

"What people say, what people do, and what they say they do are entirely different things." - Margaret Mead

"If you already know it’s going to work, it’s not an experiment, and only through experimentation can you get real invention. The most important inventions come from trial and error with lots of failure, and the failure is critical, and it’s also embarrassing." - Jeff Bezos

Another factor in the lack of reproducibility of academic research in particular is that so little is at stake. The mission is education, including the enhancement of critical thinking, and graduates are the product. While graduates certainly can pose a risk to themselves and others, universities do not sell them, and we do not drive them, dose them, cook them, ingest them, or spread them on our faces, so unlike other products they are not subject to regulation by the FDA. While we as teachers invest in students, they invest far more in themselves, and neither of these investments is monitored by the Securities and Exchange Commission.

Especially for early-career researchers, the focus is on doing something new, and whether it is something that matters is too often considered a ‘nice to have’, not a ‘must have.’ In such work, there is a dangerous potential for students to make ‘stretch claims’ that may not be backed by their data. Plus, new graduate students generally have trouble applying critical thinking to published work. Students in their later years of study are better at assessing papers for their relevance, and this is a true sign of advancing scientific education.

Compared with 50 years ago, published methods sections are substantially shorter today, despite the fact that there are now added details to include; that means it is often impossible to reproduce a published protocol without some degree of guesswork. To confuse matters further, the most careful work is often done at companies and is never published. Publishing means relatively little in many environments – better instruments, intellectual property and the threat of regulations can all matter a lot more in industry. In academia, fraud occasionally rears its head to support a degree or promotion, but by far the most common problem is the aforementioned lack of care regarding measurement details. More care is taken in industry because more is at stake in terms of public safety, potential legal action and regulatory intervention from bodies like the EPA, FDA, USDA or FBI.

Scientists also like to make things “look better.” We can do this with log plots, averaging data and playing with confidence limits. Simple tricks, such as moving the origin of the Y-axis to change how the individual bars look relative to each other, are very popular. The temptation to partake in such practices is especially pressing in clinical trials, where the ambiguities of biology meet the hope of recovering the large sums required to conduct the studies in the first place.
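The arithmetic behind the Y-axis trick is simple enough to sketch. The snippet below is only an illustration: the function name `apparent_ratio` and the two measurements are hypothetical, chosen to show how the drawn heights of two bars compare once the axis no longer starts at zero.

```python
def apparent_ratio(taller, shorter, axis_origin=0.0):
    """Ratio of the drawn bar heights when the Y-axis starts at axis_origin."""
    if axis_origin >= shorter:
        raise ValueError("axis origin must lie below the shorter bar")
    return (taller - axis_origin) / (shorter - axis_origin)


# Hypothetical measurements: the treated group is 10 percent above control.
control, treated = 50.0, 55.0

honest = apparent_ratio(treated, control, axis_origin=0.0)
truncated = apparent_ratio(treated, control, axis_origin=45.0)

print(f"Axis from 0:  treated bar looks {honest:.1f}x the control bar")
print(f"Axis from 45: treated bar looks {truncated:.1f}x the control bar")
```

With these made-up numbers, a 10 percent difference is drawn as a bar twice the height of its neighbor once the axis starts at 45. The data are unchanged; only the impression is.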

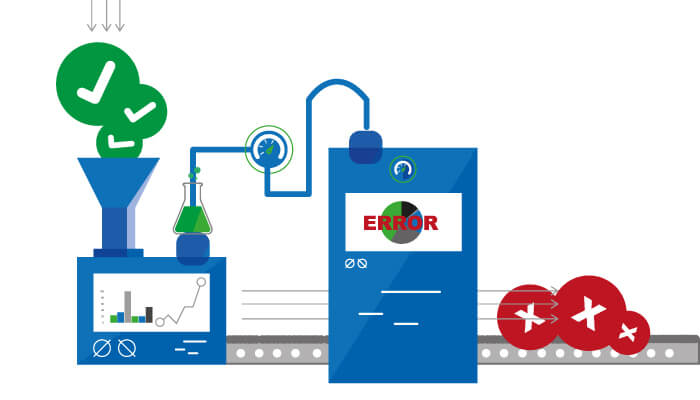

Reproducibility Fail #1: Sloppiness

Problems: calibration, appropriate standards (purity), algorithms/software, significant figures, P-values, misleading averages, misunderstood instrument limitations, publishing claims not supported by data

Solutions: maintain curiosity about reagents, tools, instruments and software (trust but verify); quality assurance programs; calibration schedules; preventive maintenance schedules
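As one small illustration of the "misleading averages" problem listed above, consider five replicate readings in which one is an outlier. The values are invented for the example, and reporting the median and range alongside the mean is only one possible safeguard, not a prescribed method.

```python
from statistics import mean, median

# Hypothetical replicate readings; the last one might reflect a
# contaminated well or a miscalibrated instrument.
readings = [1.0, 1.1, 0.9, 1.0, 9.0]

average = mean(readings)
middle = median(readings)
spread = max(readings) - min(readings)

print(f"mean   = {average:.2f}")
print(f"median = {middle:.2f}")
print(f"range  = {spread:.2f}")
```

Here the mean lands well above four of the five readings, while the median stays at 1.0. Reporting only the mean would hide the fact that a single value is driving the result.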

Reproducibility Fail #2: Wishful Thinking

Problems: selecting data (if it doesn't fit, it must be wrong), pretty pictures (beauty contests for cells and mice), redrawing figures for clarity, dry lab data (fitting unrealistic expectations)

Solutions: double-blind trials, sharing data and methods among laboratories, validation rules

Reproducibility Fail #3: Ethics

Pressures: money (collecting it or saving it), promotion, fame/hype, speed (winning a race, impatience), sending an abstract before doing the science, editors filling pages, inattentive peer reviewers, pleasing a supervisor or investor (fear)

The number of channels available to disseminate research findings has increased dramatically in recent years. The number of both commercial and society-based journals has exploded, while quality content is in relatively short supply and peer review is badly stretched. Moreover, we are experiencing an ‘open-access movement’ (in my view, the right to open access is comparable to a right to free beer), and the existence of ‘predatory’ journals complicates matters further, while online forums and webinars provide yet another avenue to get your work into the public domain. For those tempted by shortcuts in the lab, the bottom line is that any work can be published somewhere, no matter how weak or irrelevant.

So how can we make science reproducible again? Life science leadership at all levels must set the tone to improve the situation. The progress of good science is easily broken when stressed by perverse incentives that allow speed and money to trump quality. Lab managers and executives should be careful to not shoot the messenger delivering inconvenient data. A careful analysis of the experimental design with appropriate controls, validated methods, and reagents is necessary. When tenure or grant renewals depend on publications, make them good, and when a round of venture funding or releasing a manufactured lot depends on a result, get it right. The pressure is real, but it’s how this pressure is managed that matters.

In 2017, my friend and fellow curmudgeon, Ira Krull, commented on the reproducibility crisis in these pages (tas.txp.to/Krull1 and tas.txp.to/Krull2). Krull suggests the imposition of rules and associated courses on analytical method validation (AMV), analogous to the GMP, GLP, and GCP standards that we apply in biopharma once we move from the discovery phase to the development phase of a new therapeutic. I disagree. It can be debilitating to add rules and independent QA systems to the discovery phase within academia or industry. The systems are expensive to put in place, maintain, and follow.

Good academic scientists are cautious. Be sure to try things more than once, and be circumspect about the claims you make – if you don’t, your reputation will suffer. Over time, the signals will rise and the noise will sink into oblivion. Pledge to do better, but don’t let perfect be the enemy of good. The current system needs your help.