My interest in “watery” pursuits can be traced back to my youth and long summers on the eastern end of Long Island, New York, where there is a remarkably beautiful nexus of bays, creeks, canals and the Atlantic Ocean – all only 70 miles from New York City. I began SCUBA diving when I was 12 years old and have been diving ever since, both for research and for pleasure. I became determined to study the ocean and found ways to use my love of diving as a research tool in both college and graduate school. I also vowed to make my vocation and avocation one and the same – as the saying goes: “If you do what you love, you will never work a day in your life.” I have been reasonably successful in my vow and had the chance to be involved in some great projects and initiatives, including groundbreaking work in ocean thermal-energy conversion, an ocean-based solar energy process, and building undersea telecommunications systems that span the globe. I am now responsible for helping scientists and engineers gain access to the sea with ships and submersibles for one of the top oceanographic research institutions in the world.

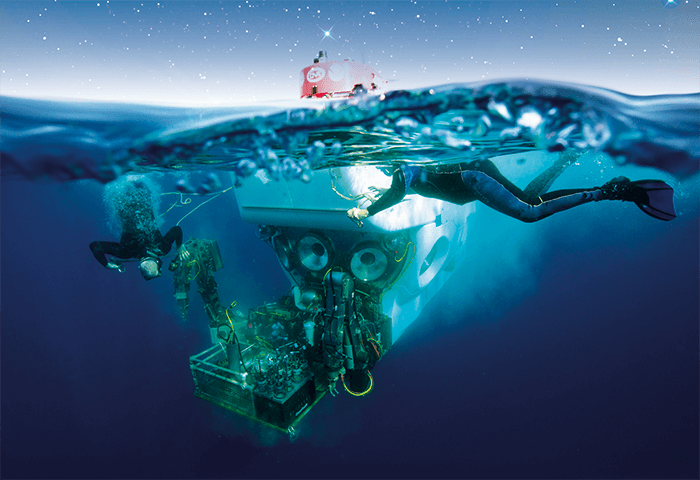

The noted oceanographer Sylvia Earle said, “The most valuable thing we extract from the ocean is our existence.” The seas occupy 71 percent of the world’s surface – and the more we study them, the more apparent it is how little we know. Indeed, any expedition is capable of discovering a new species! The area of the seafloor surveyed with low-resolution sonars – basic bathymetry – is less than 15 percent. And less than 0.05 percent has been surveyed with high-resolution robotic autonomous undersea vehicles (AUVs). Human-occupied submersibles have observed less than the area of the City of London (2.9 km²). Moreover, there are vast swathes of the ocean that have not been sampled directly. We have only been to the “hadal” zone (depths greater than 6000 meters and an aggregate area larger than North America) a handful of times. And there are entire areas of the Pacific that have no oceanographic observations other than from satellites. The ocean is incredibly complex, with perturbations that lead to unexpected and dramatic impacts elsewhere. Little is understood of the unique ecosystems found at hydrothermal vents, natural seeps, and brine pools, which cause oceanographers to rethink conventional wisdom, including the origin of life on the planet. The ocean is also changing. So we need both a baseline of knowledge and ways to measure that change and provide the public and policy-makers with the data necessary to make informed decisions. Oceanography and ocean engineering are exciting professions and our tools – high-tech ships, submersibles – combined with far-flung destinations would seem to be the perfect recipe for great public awareness and support. However, the public is generally under-informed about ocean issues. Perhaps this fact partially explains why oceanography is the stepchild to the space program (see page 34) in terms of priority and funding for research.
Annual funding for oceanographic research in the US is about $1 billion – less than seven percent of NASA’s budget. In light of the pressing ocean issues, this funding inequality needs to change – in a relative hurry.

Basic or applied?

It is true to say that oceanographers engage in basic research topics that interest them and that will attract funding. However, the roles of basic and applied research are actually on a continuum. Francis Bacon believed that true scientists should be like bees – extracting goodness from nature and using it to do useful things. Such a dynamic tends to focus research on societally important questions and creates a convergence towards the big issues of the day, such as climate change, sustainable fisheries, ocean acidification, pollution, natural hazards (earthquakes, for example), safe resource extraction practices, and delineating and understanding territorial seas and borders. Technology is also critical, and oceanographers routinely collaborate with engineers to develop the tools necessary to do their work. The harsh ocean environment presents unique challenges to access, sampling, and data collection, so the convergence of science and engineering is critical to success. Oceanography also has a history of basic research, which has produced opportunistic breakthroughs and advances, enabling unexpected and important applications. A great example, which also demonstrates the science-engineering convergence, is the application of scientific methods and technologies developed to study hydrothermal vents to the Deepwater Horizon oil spill, including measurement of the oil-spill flow rate, the sampling of the effluent at the blowout preventer, and the mapping of a deepwater hydrocarbon plume. In that case, the fruits of basic science and research responded to meet an important societal need.

Ocean scientists and engineers who are engaged in basic research tend to refrain from involvement in advocacy, which ensures an unbiased and agnostic approach to questions – and maintains the integrity of the science. That said, the data obtained – and the outcomes achieved – are publicly available for advocates and interest groups to cite for or against a particular position.

Data collection challenge

Key challenges to oceanography are the spatial and temporal domains. The ocean is vast and we have relatively few means of access and little persistence. Research vessels and underwater vehicles go out and scientists learn something about specific locations for the period of time they are there. Fixed “ocean observing systems” provide an extended time series of data but at one location. Unlike our pervasive weather systems on land, there are very few ocean observing systems, so the spatial coverage is very limited. Remote sensing systems, such as satellites, help to address the spatial and temporal problem, but paint an incomplete, lower-resolution picture. Oceanography brings together biology, chemistry, physics, and geology – and many areas of specialization within those disciplines. As such, the tools of the oceanographer are the tools of the scientist – although sometimes adapted for the harsh marine environment. Marine microbiologists need microscopes and chemical oceanographers need mass spectrometers. Oceanography presents a unique set of problems, however, because of the difficulties of access, remoteness, expense and risk. Samples collected at depth may change or disintegrate when brought to the surface. Also, samples collected aboard ship or at a remote field site might be stored for weeks or months and may require special handling. This lag time prior to analysis may affect the quality of the results, so the traditional solution is to perform as much analysis as possible in the shipboard laboratory. Indeed, today’s research vessels have well-equipped labs, so a lot can be done shortly after collection. The trend, however, is for oceanographers and engineers to find ways to make more measurements in situ. And the Holy Grail is to connect in-situ measurements via a communication path to the ship or even ashore to obtain results in real time.
Fortunately, many of the most basic and important measurements in oceanography can be obtained accurately, as long as you can get there. These include temperature, salinity, pH, dissolved oxygen concentration, and fluorescence, among others. These are parameters important to all oceanographers and are routinely collected using sensors deployed from a ship, secured to a fixed mooring, or strapped to a submersible, regardless of any other more complex sampling objective. Growing use of robotic autonomous undersea vehicles (AUVs) and tethered remotely operated vehicles (ROVs) has caused sensor design to evolve to meet the stringent form factor, weight and power constraints of these unmanned submersibles.

What we measure and how we do it

Excess carbon dioxide is now a recognized pollutant and greenhouse gas. It is also absorbed by the ocean, which drives down pH and the saturation state of calcium carbonate, a process called ocean acidification (OA). Oceanographers are studying OA to understand the impacts on shellfish, plankton, and corals, whose skeletons are made of calcium carbonate. Key measurements of pH are made using in-situ sensors. Dissolved inorganic carbon (a proxy for calcium carbonate concentration) and pH are also determined by analyzing water samples with coulometric and potentiometric titrations, respectively. Hurricane frequency and intensity are active areas of oceanographic research. Marine geologists such as WHOI senior scientist Jeff Donnelly have determined that sediment deposits from historical hurricanes can be identified in cores taken from coastal marshes. Data from these deposits are used to reconstruct the impacts, determine patterns, and put recent hurricanes, such as Sandy, in the context of the last several millennia. A host of analytical tools is used to analyze the cores, including X-ray fluorescence scanning to determine elemental chemistry and the detection of stratigraphic markers, such as cesium isotopes, using high-resolution gamma detectors. Accelerator mass spectrometry (AMS) is used for radiocarbon dating of fossils. In fact, we operate the National Ocean Sciences Accelerator Mass Spectrometer (NOSAMS) facility at Woods Hole, which is a resource used by the entire oceanographic community.

As noted, oceanographers also study hydrothermal vents. These fissures that discharge geothermally heated water were first discovered in 1977 and opened a new “sea” of inquiry into chemosynthesis in the marine environment. Vent sites have now been found all over the ocean, primarily at tectonic plate boundaries, hot spots, and fault sites. They are known for their chemical and biological complexity, high temperatures, and ephemeral nature.
Oceanographers have developed ways to collect and analyze samples at vent sites and these have increasingly enabled better understanding of their character, variability – and importance. For example, WHOI chemist Jeff Seewald developed an isobaric gas tight (IGT) sampling device to collect fluids flowing from hydrothermal vents at hydrostatic seafloor pressures, thereby retaining the volatile species in situ concentrations for later analysis ashore. Once in the lab, more traditional techniques such as gas chromatography (GC) and isotope ratio MS could be employed after samples were separated into gas, seawater and other fluid constituents. Research on natural gas “seeps” and asphalt volcanoes led to the development of a mass spectrometer by WHOI researcher Rich Camilli that could be operated from AUVs, ROVs or human-occupied submersibles, providing the ability to identify targeted compounds in situ. Some of this pioneering work was done off Southern California, where there are significant natural flows of both oil and methane gas from seafloor features. Submersibles are used to map and create photo mosaics of feature topography, to sample solid mounds of asphalt for traditional laboratory analysis, and to make in situ measurements of methane (or other targeted compound) distributions using mass spectrometry. Such an assembly of tools and analytical techniques has helped to further our understanding of these naturally occurring point sources of hydrocarbon in seawater, which are now known to account for half of the overall input into seawater worldwide.

Plankton represents the bottom of the food chain in the ocean, so understanding its biology is critically important. The traditional method for studying plankton is by towing nets behind a ship for occasional recovery, extraction of the plankton, preservation, and study in the lab. An early step in the lab is to analyze samples under the microscope for species identification and diversity assessment. While these methods are informative, they are obviously limited by the length of tow, sample size and, most importantly, the destructive aspects of the hydraulic forces on the delicate tissues of the species being studied. Over the last decade, new devices have been developed for imaging plankton in situ, in real time. In effect, the microscope now goes underwater, continuously images plankton, and provides data back to a computer that can count individuals, identify species, and archive the data. Some systems can be towed behind a ship (video plankton recorder; VPR), some can be mounted at a fixed location (FlowCytoBot), others can be fixed to a ship’s seawater system (continuous plankton imaging and classification system), and most recently others have been affixed to AUVs, dubbed PlankZooka and SUPR-REMUS. These devices take advantage of advances in imaging and computing power, since gigabytes of data can be generated. A VPR, for example, has been towed on record-breaking transits across the Atlantic and the Pacific, generating data that has changed our understanding of the concentrations of plankton in the ocean in both space and time. With this technology, we now can detect blooms and water masses devoid of plankton that were never before identified, let alone understood, because of the “snapshot” nature of traditional methods.

WHOI is a nonprofit institution based on Cape Cod in Woods Hole, Massachusetts, USA. Founded in 1930 with a grant from the Rockefeller Foundation, WHOI is the largest independent institution in the world dedicated to the study of ocean science and technology. With an annual turnover of over US $220 million, funding is obtained primarily through grants from US government agencies (such as the National Science Foundation and the Office of Naval Research), private foundations, philanthropy and industry. WHOI scientists seek answers to important questions, both fundamental and applied, and are leaders in their fields. Unique to WHOI is the strong coupling between the basic sciences, engineering and seafaring. WHOI also has a joint graduate education program with the Massachusetts Institute of Technology and awards PhD degrees in all of the oceanographic disciplines and ocean engineering. To learn more about WHOI, visit: www.whoi.edu

Reacting to disasters

In 2010, the blowout of the Deepwater Horizon Macondo well was a call to action for oceanographers and ocean engineers. Many tools and techniques developed for basic research proved to have critically important applications and helped to measure the scale and impacts of the event. For example, the IGT sampler developed for hydrothermal vents was used to obtain the definitive sample of the effluent from the wellhead. The sample was analyzed to determine the gas/oil/seawater fractions and the composition of hydrocarbon gases and oil. Knowing the composition provided a means of correlating samples collected elsewhere in the Gulf of Mexico with the Macondo well, and helped scientists understand the fate of the oil in terms of transport and weathering. The underwater MS instrument developed to study natural seeps and asphalt volcanoes was used to identify and map (in 3D) the hydrocarbon plume that resulted from the continuous discharge of oil from the Macondo well. The MS was deployed both from a traditional “rosette” lowered by a wire from a research vessel and from an AUV. The MS was “tuned” to identify select hydrocarbon compounds, tracking 10 chemical parameters in real time. In three missions, the AUV was able to confirm the presence of a plume at 1100 meters depth that trended southwest from the well for 35 km. Water samples collected using traditional methods from research vessels were analyzed in the laboratory for dispersants using ultra high-resolution MS and liquid chromatography (LC) with tandem MS. The concentration of dispersants in space and time proved to be an important and novel proxy for the movement of Macondo oil and gas, and was shown to be associated with the oil and gas in the plume mapped using the in-situ mass spectrometer.
These examples, among many others, demonstrate the resourcefulness of oceanographers; without compromising scientific rigor, dozens of missions went out to sea in the weeks and months following the blowout to measure the relevant parameters, to better understand the impacts, and to help inform decision-makers.

In the grand scheme of things, analytical scientists working in oceanography aren’t worlds apart from their land-based peers; we share similar goals in serving science and society. Author and marine biologist John Steinbeck wrote in The Log from the Sea of Cortez, “We search for something that will seem like truth to us; we search for understanding; we search for that principle which lays us deeply into the pattern of all life; we search for the relations of things, one to another...” And so, the search in oceanography continues. There is much to be done and it starts with educating the public about the urgency of doing it. In the words of explorer and proto-environmentalist Alexander von Humboldt, “Weak minds complacently believe that in their own age humanity has reached the culminating point of intellectual progress, forgetting that by the internal connection existing among all natural phenomena, in proportion as we advance, the field traversed acquires additional extension, and that it is bounded by a horizon which incessantly recedes before the eyes of the enquirer.”