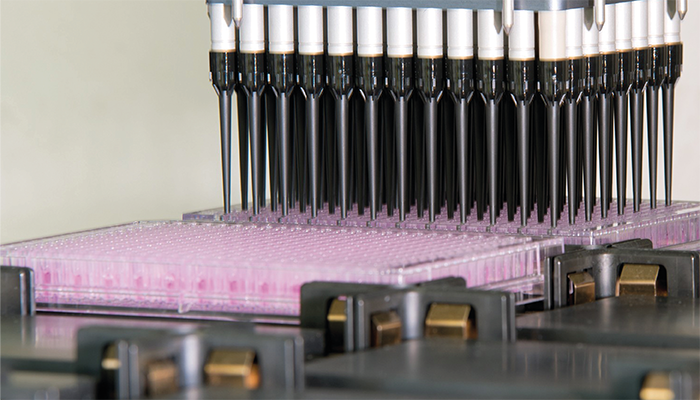

Automated pipetting platforms for the preparation and measurement of plasma samples and chemical mixtures in high-throughput bioassays at the UFZ.

Credit: Bodo Tiedemann

Chemicals that people take up from their everyday environment, and that are ineffective on their own at low concentrations, can combine with others in the human body to form mixtures that produce neurotoxic effects, according to a recent study involving pregnant women.

A team from the Helmholtz Centre for Environmental Research (UFZ) combined high-resolution mass spectrometry (HRMS) with an in vitro assay to determine the neurotoxic effects of environmental chemicals when they occur together in the human body. HRMS identified and quantified the chemicals, while the assay measured their effects. The team found that certain chemicals that had no effect on their own could act together with others to produce neurotoxic effects in the cell-based assay.

The team extracted chemicals from plasma samples of 624 pregnant women in the Leipzig mother-child cohort LiNA, using a nonselective extraction method for organic chemicals. They then used HRMS to screen for 1,000 environmental chemicals to which humans can be exposed, and were able to quantify around 300 of them. Next, they analyzed the neurotoxic effects of the individual chemicals and of around 80 chemical mixtures that they prepared themselves in realistic concentration ratios, using a prediction model to estimate the mixtures' neurotoxicity and a cellular bioassay based on human cells to test those predictions.

To better understand the inspiration and implications of the findings, we reached out to first author Beate Escher for her thoughts on the study.

What was your main inspiration for this work?

After many years of working on complex chemical mixtures in the environment, we asked ourselves how these findings might transfer to the human body. With this in mind, we took the target screening methods we use for wastewater and surface water and replaced some of the target chemicals with suspected neurotoxicants.

Our team has a lot of experience with high-throughput toxicity testing of environmental samples and chemicals. We physically mix chemicals in the concentration ratios in which they were detected (in the environment or in people) and then test these mixtures in the same assay that we use for the sample extracts and the single chemicals. Comparing the effects predicted by mixture effect models with the measured mixture effects allowed us to evaluate how chemicals act together in mixtures.
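The interview does not say which mixture effect model the team used. One widely used model for chemicals that produce the same effect is concentration addition (CA): the effect concentration of the mixture is the inverse of the sum, over all components, of each component's fraction in the mixture divided by its own effect concentration. The Python sketch below assumes CA; the function names, chemical names, and numbers are hypothetical and are not data from the study.

```python
"""Concentration addition (CA) sketch for a mixture effect concentration.

Assumption: CA is an illustrative choice of mixture model; all chemical
names and values are hypothetical, not results from the study.
"""


def mixture_fractions(detected: dict[str, float]) -> dict[str, float]:
    """Convert detected concentrations into mixture fractions p_i.

    Only the ratios matter, so any common concentration unit works.
    """
    total = sum(detected.values())
    return {name: conc / total for name, conc in detected.items()}


def ca_effect_concentration(fractions: dict[str, float],
                            ec_single: dict[str, float]) -> float:
    """Predict the mixture effect concentration under CA.

    CA: 1 / EC_mix = sum_i (p_i / EC_i), where EC_i is the effect
    concentration (e.g. EC10) of chemical i tested alone. The result has
    the same unit as the EC_i values.
    """
    if abs(sum(fractions.values()) - 1.0) > 1e-9:
        raise ValueError("mixture fractions must sum to 1")
    return 1.0 / sum(p / ec_single[name] for name, p in fractions.items())


if __name__ == "__main__":
    # Hypothetical concentrations detected in one person (nM; only ratios are used)
    detected = {"chem_A": 2.0, "chem_B": 6.0, "chem_C": 2.0}
    # Hypothetical single-chemical EC10 values from the bioassay (µM)
    ec10 = {"chem_A": 10.0, "chem_B": 50.0, "chem_C": 5.0}

    p = mixture_fractions(detected)
    print(f"Predicted mixture EC10: {ca_effect_concentration(p, ec10):.2f} µM")
```

With these made-up inputs the script prints a predicted mixture EC10 of 13.89 µM. Comparing such a prediction with the EC10 measured for the physically prepared mixture indicates whether the components act in a concentration-additive way.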

What was the biggest analytical challenge you faced during your research and how did you overcome it?

We encountered several challenges. First, we had to improve the extraction method so that it would extract hydrophilic, hydrophobic, neutral, and charged chemicals: what you do not have in your extract, you cannot detect, even with the finest analytical method. Because we combined chemical analysis with mixture effect assessment in bioassays, and the same extracts were tested in both, we could not add internal standards. In the end, we settled on a two-step extraction process, polymer extraction with silicone followed by solid-phase extraction, which gave the best recovery across 400 chemicals as well as of mixture effects.

Next, we needed quantitative chemical analysis to be able to model mixture effects. Our goal was to capture up to 1,000 chemicals, so we had to use target screening analysis with external standards. To cover as broad a chemical space as possible, we used liquid chromatography and gas chromatography coupled to HRMS (LC-HRMS and GC-HRMS) in parallel, and complemented the chemical analysis by quantifying mixture effects with an in vitro bioassay. Finally, we had to develop and optimize an automated data evaluation script (data evaluation is a nightmare!).

We had many samples, so we needed to test mixture effects with a bioassay that was simple yet representative. Our bioassay is based on neuroblastoma cells that have been differentiated to form neurites and perform signal transduction. Although it is an artificial test system, it mimics basic functions of neural cells. With a more complex test system (let alone animal studies), it is not possible to run toxicity tests that give reliable and reproducible results for more than 1,000 samples (e.g., blood extracts, single chemicals, and mixtures). This is why we rely on in vitro test systems for this kind of evaluation. The assay we use is well established in research; we simply adapted it to high throughput. The idea was not to test health effects directly, but to capture mixtures of chemicals that cause a specific effect (in this case, neurotoxicity).

Can you sum up the significance of your findings for our readers?

We found a large and diverse range of chemicals in human blood, from legacy chemicals such as persistent organic pollutants to more modern ones. Although each was present at a concentration too low to cause an effect on its own, they all acted together in mixtures.
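A simple way to picture how individually ineffective chemicals can still act together is the toxic unit (TU) approach, which is consistent with concentration addition: each chemical's concentration is divided by its own effect concentration, and the mixture is expected to reach that effect level once the TUs sum to 1. The interview does not say that the study used toxic units; this Python sketch is illustrative only, and all names and values in it are hypothetical.

```python
"""Toxic unit (TU) sketch: sub-threshold chemicals adding up under
concentration addition. All names and values are hypothetical."""


def toxic_units(conc: dict[str, float], ec: dict[str, float]) -> dict[str, float]:
    """TU_i = C_i / EC_i, with C_i and EC_i in the same unit."""
    return {name: conc[name] / ec[name] for name in conc}


def mixture_reaches_effect(tu: dict[str, float]) -> bool:
    """Under concentration addition, the effect level is reached when sum(TU) >= 1."""
    return sum(tu.values()) >= 1.0


if __name__ == "__main__":
    # Hypothetical concentrations and single-chemical EC10 values (µM)
    conc = {"chem_A": 0.5, "chem_B": 1.2, "chem_C": 2.0, "chem_D": 0.4}
    ec10 = {"chem_A": 2.0, "chem_B": 4.0, "chem_C": 8.0, "chem_D": 1.0}

    tu = toxic_units(conc, ec10)
    for name, value in tu.items():
        # Each TU is below 1, i.e. each chemical alone is below its own EC10
        print(f"{name}: TU = {value:.2f}")
    print(f"Sum of TUs = {sum(tu.values()):.2f}")
    print("Mixture expected to reach EC10:", mixture_reaches_effect(tu))
```

In this made-up case no single chemical reaches its EC10 (the TUs range from 0.25 to 0.40), yet together they sum to 1.20 TU, so concentration addition predicts that the mixture exceeds the EC10 level. Measured blood concentrations and effect data would give different numbers.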

Ultimately, we were able to see the entire history of chemical production over the past 100 years: from metabolites and long-phased-out persistent organic pollutants (POPs) such as dichlorodiphenyltrichloroethane (DDT) to modern chemicals, chemicals from food contact materials, personal care products, antioxidants, and industrial chemicals. Whatever is found in the environment or the kitchen cabinet, you can find in blood if it is sufficiently persistent. Perfluorooctanoic acid (PFOA) was found in almost every sample. We could not capture everything, as our screening analysis was too coarse; our aim was to quantify new and old, persistent and degradable chemicals to demonstrate the relevance of mixtures of seemingly unrelated compounds. We designed over 80 mixtures in the concentration ratios detected in individual people, and in every case the effects of the components added up in the in vitro assay.

What implications could your findings have for our understanding of chemical exposure risk, monitoring, and regulation?

Our findings clearly show that we cannot regulate on a chemical-by-chemical basis, and that complex mixtures must be addressed in risk assessment and regulation.