Calibrating for an analyte with spectroscopic techniques requires a model: a mathematical relationship between a property of interest, such as the analyte concentration, and the instrumental signal. Once a model is obtained, it can be used to predict that property in future samples. A spectral calibration model can be obtained by univariate regression, if an appropriate sensor (for example, a single wavelength) can be identified, or by multivariate regression. The univariate model is based on the common least squares (LS) criterion, which minimizes the sum of the squared residuals, a measure of the degree of fit. This is the calculation behind the trendline command in Excel that many are familiar with. The same criterion is used in multivariate regression methods such as partial least squares (PLS); with PLS, however, projections of the measured data are used instead of the measured data themselves. An upshot of the PLS projections is that the regression vector shrinks in size, which lowers the variance relative to the ordinary LS (OLS) solution. The magnitude of the regression vector depends on the number of latent variables (LVs) used in the projection, so the number of LVs can be considered the discrete tuning parameter of PLS.
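
To make the univariate LS criterion concrete, here is a minimal sketch in plain Python. The calibration standards, the absorbance values, and the function names are hypothetical and chosen only for illustration. The sketch also assumes a classical calibration, in which the signal is regressed on concentration and the fitted line is then inverted to predict new samples.

```python
def fit_univariate_ls(x, y):
    """Return (slope, intercept) that minimize the sum of squared residuals."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Hypothetical standards: concentration vs. absorbance at a single wavelength.
concentration = [0.0, 1.0, 2.0, 3.0, 4.0]
absorbance = [0.02, 0.21, 0.39, 0.61, 0.80]

slope, intercept = fit_univariate_ls(concentration, absorbance)


def predict_concentration(signal):
    """Invert the fitted line to estimate concentration from a new signal."""
    return (signal - intercept) / slope


print(f"slope={slope:.4f}, intercept={intercept:.4f}")
print(f"predicted concentration at A=0.50: {predict_concentration(0.50):.3f}")
```

This is the same fit a spreadsheet linear trendline produces for one sensor; the multivariate methods discussed here extend the idea to many wavelengths at once.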

The multivariate penalty regression method known as ridge regression (RR) takes a different approach. In addition to minimizing the LS criterion, it explicitly minimizes a penalty on the size of the regression vector. The tuning parameter for RR is continuous and weights this penalty: a greater weight results in a smaller regression vector. Because a continuous tuning parameter means a large number of possible models to select from, RR has not seen the popularity that PLS has. However, powerful fusion methods are now available that can select an acceptable RR model, as well as a PLS model, for a given set of calibration samples. Moving beyond RR, many other penalties of various types have recently been studied, and more are being actively proposed and investigated with different purposes in mind. With a variety of penalties, the regression model can be steered towards desirable solutions and away from undesirable ones. Each penalty is accompanied by its own tuning parameter, which needs to be optimized.

Penalties can also be tailored for specific purposes. An area seeing a large increase in novel uses of penalties is calibration maintenance (model updating or, more generally, domain adaptation), as seen in recent papers published by Google. In analytical chemistry, model updating generally means that a model has first been formed from a set of calibration samples measured under an inherent set of primary conditions (physical and chemical matrix effects, instrument, environment, and so on). What if we need the primary model to predict samples measured under new (secondary) conditions? For example, a model may have been formed to predict soil organic content for a specific geographic region or soil type using a handheld near-infrared spectrometer, but it may then be needed to predict samples from a different geographic region: the secondary conditions, or new domain. Such model updating problems can be solved using penalty regression methods.
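
As a minimal sketch of the ridge penalty described earlier, the snippet below uses the standard closed-form RR solution, which minimizes ||Xb - y||² + λ||b||². The synthetic spectra, the function name `ridge_vector`, and the three tuning parameter values are illustrative assumptions, and no mean centering or intercept is included. The point is only to show that a greater penalty weight gives a smaller regression vector.

```python
import numpy as np


def ridge_vector(X, y, lam):
    """Closed-form ridge solution: solve (X'X + lam*I) b = X'y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


# Synthetic stand-in for spectra: 20 samples measured at 50 wavelengths.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 50))
b_true = rng.normal(size=50)
y = X @ b_true + 0.1 * rng.normal(size=20)

# Larger lam puts more weight on the size penalty.
lambdas = [0.01, 1.0, 100.0]
norms = [float(np.linalg.norm(ridge_vector(X, y, lam))) for lam in lambdas]

for lam, norm in zip(lambdas, norms):
    print(f"lambda={lam:g}  regression vector norm={norm:.3f}")
```

Because the tuning parameter is continuous, any positive value yields a different model, which is why model selection is a larger task for RR than for PLS with its handful of LV choices.
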
As noted, various penalties have been or are being studied, including penalties that induce sparsity in the regression vector (wavelength selection). New approaches are also being proposed that perform model updating with only unlabeled data from the secondary conditions, that is, spectra with no reference values.
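
Model updating by penalty regression can be illustrated with one simple formulation, which is an assumption here and not a method described in this article: add a second penalty, weighted by its own tuning parameter, on the prediction error for a few secondary samples. Unlike the unlabeled approaches just mentioned, this sketch assumes the secondary samples have reference values. The data are synthetic, and the names `updated_model`, `lam`, and `tau` are hypothetical.

```python
import numpy as np


def updated_model(X_p, y_p, X_s, y_s, lam, tau):
    """Minimize ||X_p b - y_p||^2 + lam*||b||^2 + tau*||X_s b - y_s||^2."""
    p = X_p.shape[1]
    A = X_p.T @ X_p + lam * np.eye(p) + tau * (X_s.T @ X_s)
    rhs = X_p.T @ y_p + tau * (X_s.T @ y_s)
    return np.linalg.solve(A, rhs)


rng = np.random.default_rng(1)
p = 30
b_primary = rng.normal(size=p)
# Secondary conditions: the underlying relationship has shifted.
b_secondary = b_primary + 0.5 * rng.normal(size=p)

X_p = rng.normal(size=(40, p))   # many primary calibration samples
y_p = X_p @ b_primary
X_s = rng.normal(size=(4, p))    # only a few labeled secondary samples
y_s = X_s @ b_secondary


def secondary_sse(b):
    """Sum of squared errors on the labeled secondary samples."""
    return float(np.sum((X_s @ b - y_s) ** 2))


b_no_update = updated_model(X_p, y_p, X_s, y_s, lam=1.0, tau=0.0)
b_update = updated_model(X_p, y_p, X_s, y_s, lam=1.0, tau=10.0)

print(f"secondary SSE without update: {secondary_sse(b_no_update):.3f}")
print(f"secondary SSE with update:    {secondary_sse(b_update):.3f}")
```

Setting `tau=0` recovers the primary ridge model; increasing it pulls the regression vector towards the secondary conditions. Both tuning parameters would need to be selected in practice, and the fit to the few updating samples says nothing by itself about prediction of new secondary samples.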

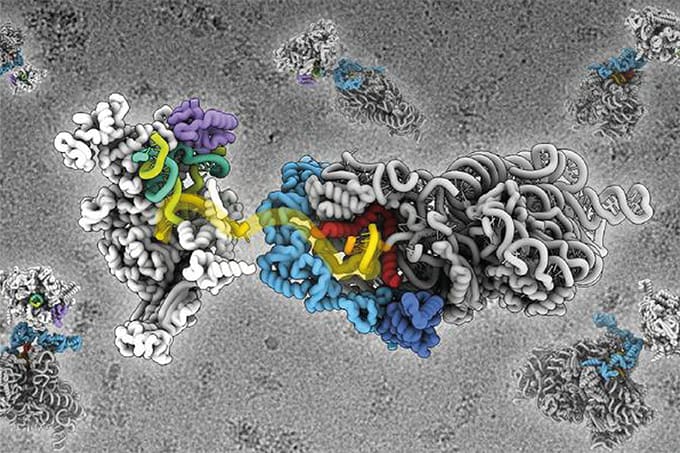

An upcoming issue of the Journal of Chemometrics, due around May next year, is devoted to penalty methods. It includes papers on unique penalties for novel model updating approaches across a variety of spectral situations, greater image resolution for fluorescence microscopy, a tuning-parameter-independent support vector machine, experimental design and improved sparsity approaches, and enhanced 3D spectral data analysis. In my view, penalty methods are the future and will make spectroscopic model updating a reality.