Of all the chromatographic techniques, capillary gas chromatography (CGC) is by far the most mature. Here, we define a ‘mature technique’ as one that has reached a state of ‘satisfaction’, with a stable but low growth rate. Indeed, few groundbreaking developments have been realized in CGC over the past decade. Following Golay’s invention of the technique in 1957 (1), most of the theoretical fundamentals of CGC were described in the 1960s and 1970s. Since then, few studies have added valuable extensions to that fundamental work. Notable exceptions are the papers by Blumberg and Klee (2),(3), who introduced novel and practical concepts such as speed-optimized flow rates and optimal heating rates. Surprisingly, this work has hardly been applied in practice to optimize GC separations! Does this reflect a decline in knowledge (and know-how) in recent years?
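The ‘optimal heating rate’ concept is often summarized as a rule of thumb of roughly 10 °C per void time, which is commonly attributed to the Blumberg and Klee work. The void time is the hold-up time of an unretained solute. The sketch below assumes that rule and derives the void time from column length and average carrier-gas linear velocity. The function names and the example values (a 30 m column at 35 cm/s) are hypothetical illustrations and are not taken from the cited papers.

```python
def void_time_min(column_length_m: float, avg_linear_velocity_cm_s: float) -> float:
    """Hold-up (void) time t_M in minutes: column length divided by average linear velocity."""
    length_cm = column_length_m * 100.0
    return length_cm / avg_linear_velocity_cm_s / 60.0


def optimal_heating_rate_c_per_min(column_length_m: float,
                                   avg_linear_velocity_cm_s: float,
                                   degrees_per_void_time: float = 10.0) -> float:
    """Rule-of-thumb heating rate in °C/min, assuming ~10 °C per void time."""
    return degrees_per_void_time / void_time_min(column_length_m, avg_linear_velocity_cm_s)


if __name__ == "__main__":
    # Hypothetical example: 30 m column operated at 35 cm/s average linear velocity.
    t_m = void_time_min(30.0, 35.0)
    rate = optimal_heating_rate_c_per_min(30.0, 35.0)
    print(f"t_M = {t_m:.2f} min, heating rate ~ {rate:.1f} °C/min")
```

Under this rule, the suggested rate scales inversely with void time. For example, halving the column length at the same linear velocity doubles it.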

One of the most important milestones on the way to the ‘mature’ state of CGC was the invention of fused silica capillary columns (4). These made it possible to produce columns very reproducibly, with efficiencies reaching the theoretical maxima. High-quality, inert, temperature-stable columns in various dimensions, coated with a range of stationary phases, are now available from several vendors. However, only a limited number of innovations in CGC column technology have been applied in practice in recent years. This is partly because CGC is increasingly combined with mass spectrometry (MS), which provides an additional level of specificity that reduces the need for other stationary phase selectivities. The introduction of ionic liquids as CGC stationary phases is a notable exception. In the field of sample introduction, often considered the Achilles’ heel of CGC, most fundamental work was performed in the 1980s and 1990s. Theoretical and practical aspects of split, splitless and cool on-column injection have been studied and described by Grob (5),(6) and many others, while programmed temperature vaporization (PTV) injection was pioneered by Vogt, Poy and Schomburg (7). Apart from some developments in gas or pressurized liquid injection, and in the hyphenation of sample preparation techniques (thermal desorption, dynamic headspace, derivatization, and many others) to CGC, hardly any research is currently devoted to the further development and performance evaluation of inlet systems. Even in detection, only marginal improvements seem to be taking place. The exception is the giant leap in the performance of mass spectrometers (low and high resolution, MS and MS/MS, hard and soft ionization, and so on).
Spectroscopic detectors that have received renewed attention in recent years, such as vacuum ultraviolet (VUV) and Fourier-transform infrared (FTIR) detectors, are useful for specific problems. But will they ever be as universally applied as the well-known ‘old’ detectors?

In contrast, one might get the impression that multidimensional CGC, and in particular comprehensive two-dimensional gas chromatography (GC×GC), is the only area of CGC currently being developed by academia and research groups. We work at RIC, an analytical laboratory that uses CGC-MS in various application areas to provide analytical solutions to industry, institutions and other laboratories. We are increasingly convinced that GC×GC is unrealistic and unproductive for a large number of applications, for example the routine analysis of pesticides in food and beverages. All too often, GC×GC is used out of hype rather than need! At the same time, we are concerned about the lack of optimization, validation and critical evaluation of CGC methods under development or being published. We also have the impression that the quality of recent scientific publications in the field of CGC is quite variable. Such observations put pressure on CGC as a mature technique of great value...

The State-of-the-Art: Controversial Publication

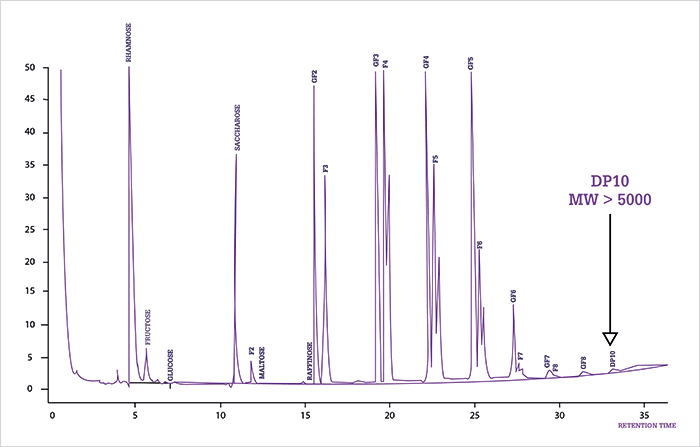

A typical example of this pressure is the controversial paper entitled “Thermal degradation of small molecules: a global metabolomic investigation” (8), which questions the correctness of data produced by GC-MS in the life sciences. The authors claimed “[...] a significant amount of spectra data generated in GC-MS experiments may correspond to thermal degradation products.” Not surprisingly, the paper sparked ‘heated’ disputes (9) and comments (10). One could easily argue that the experimental design, in which standards and biological samples were heated off-line and then analyzed by LC-ESI-MS, can in no way simulate what happens in a CGC column and system. Moreover, analyzing silylated samples intended for CGC by LC-MS under aqueous conditions is not common practice. In fact, it is not done at all, because silyl derivatives are readily hydrolyzed by water. This approach led to one of the paper’s most striking statements: “[...] the productive effect of derivatization was not found to be significant.” This claim, and others in the paper, can simply be refuted by looking at the performance of CGC over the past few decades. A good example is the routine quantitative analysis of steroids in medical laboratories. A foolproof method based on derivatization by oximation and silylation, followed by direct injection and GC analysis, was published in 1975 (11). A more recent example is the analysis of oligosaccharides, performed routinely by CGC for quality control in food laboratories. Figure 1 shows the result of combining oximation and silylation, an appropriate sample introduction method (cool on-column) and high-temperature CGC analysis. With this approach, fructose oligomers up to decamers, representing molecular weights close to 5000 Da, can be determined quantitatively. Such successful routine applications are not considered ‘innovative’ or ‘novel’ and do not make it into scientific papers. They do, however, prove the power and maturity of CGC.

In our opinion, the major (in fact, the only) value of the paper is that it represents a wake-up call for the GC community, and for the chromatographic community in general. Most 20th-century centers of GC expertise have been reoriented or absorbed into other faculties or institutions. As a result, there has been a slow but significant loss of knowledge of GC fundamentals, together with an attrition of know-how. Young scientists entering the field can no longer rely on in-house expertise. In their quest for rapid publication, they often focus exclusively on recent (review) papers and miss out on groundbreaking fundamental work. Consequently, attention often goes to applying ‘hyped’ developments without a critical appraisal of important aspects, such as which injector liner and which mode of operation will give decomposition-free profiles. With this in mind, we would like to quote Koni Grob from a presentation given in 2000 (12): “Splitless injection [...] is one of the major sources of error in trace analysis by GC and not sufficiently well controlled. Losses through the septum purge outlet, for instance, often exceed 30 percent (overloading of the vaporizing chamber), and frequently less than 60 percent of the sample is transferred into the column. Some of the injectors on the market are simply inadequate. Should it be performed by a short or a long syringe needle, with an empty or a packed liner of 2 or 4 mm i.d., with a fast or a slow autosampler? Methods just state ‘2 µl splitless injection’, not even specifying whether or not this includes the volume eluted from the syringe needle, probably because no clear working rules have been elaborated.”
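Grob’s points about ‘overloading of the vaporizing chamber’ and about choosing between 2 mm and 4 mm i.d. liners can be made concrete with a back-of-the-envelope vapor-volume estimate. The sketch below rests on several assumptions:

- ideal-gas behavior;
- complete evaporation at inlet temperature and head pressure;
- a hypothetical 78 mm liner length;
- rounded handbook values for solvent density and molar mass.

The function names and conditions are illustrative only. The estimate ignores packing and the fact that the usable liner volume is smaller than its geometric volume.

```python
import math

R = 8.314          # gas constant, J/(mol*K)
ATM_KPA = 101.325  # ambient pressure, kPa


def vapor_volume_ul(injection_ul: float, density_g_ml: float, molar_mass_g_mol: float,
                    inlet_temp_c: float, head_pressure_kpa_gauge: float) -> float:
    """Ideal-gas vapor volume (µL) of a liquid injection at inlet conditions."""
    moles = injection_ul * 1e-3 * density_g_ml / molar_mass_g_mol  # µL -> mL -> g -> mol
    temp_k = inlet_temp_c + 273.15
    pressure_pa = (head_pressure_kpa_gauge + ATM_KPA) * 1e3  # gauge -> absolute, in Pa
    volume_m3 = moles * R * temp_k / pressure_pa
    return volume_m3 * 1e9  # 1 m^3 = 1e9 µL


def liner_volume_ul(inner_diameter_mm: float, length_mm: float) -> float:
    """Geometric volume of an empty cylindrical liner (1 mm^3 = 1 µL)."""
    return math.pi * (inner_diameter_mm / 2.0) ** 2 * length_mm


if __name__ == "__main__":
    # Hypothetical conditions: 2 µL injection, 250 °C inlet, ~70 kPa (about 10 psi) gauge.
    solvents = {"hexane": (0.655, 86.18), "methanol": (0.791, 32.04)}  # (g/mL, g/mol)
    for name, (rho, molar_mass) in solvents.items():
        vapor = vapor_volume_ul(2.0, rho, molar_mass, 250.0, 70.0)
        for id_mm in (2.0, 4.0):
            capacity = liner_volume_ul(id_mm, 78.0)  # 78 mm length is an assumption
            status = "fits" if vapor < capacity else "overfills"
            print(f"{name}: {vapor:.0f} µL vapor, {id_mm:.0f} mm liner ({capacity:.0f} µL): {status}")
```

Under these assumptions, a 2 µL hexane injection fits a 4 mm liner but not a 2 mm one, while 2 µL of methanol exceeds both. This illustrates why a method stating only ‘2 µl splitless injection’ is underspecified.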

GC×GC Hype

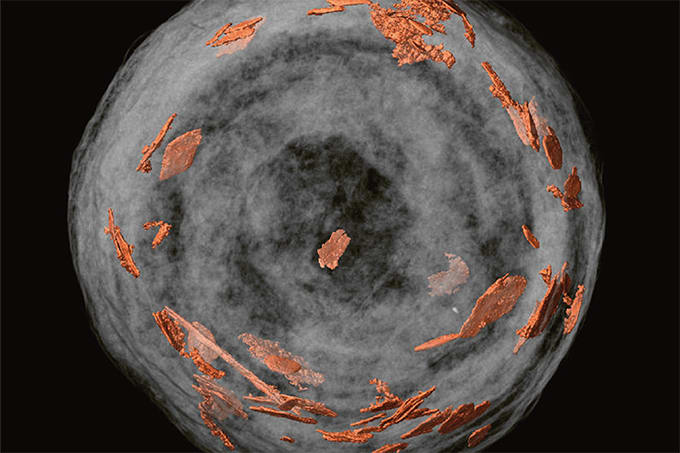

A typical example of what we perceive as ‘hype’ in analytical chemistry is the stampede towards GC×GC. The following statements made by some protagonists of GC×GC are worth mentioning: “Nearly 90 percent of the published studies utilized GC×GC, while only 10 percent used heart-cutting 2D-GC. It is hard to tell if this large discrepancy is because the maturity of heart-cutting 2D-GC makes such studies less ‘publishable’ or because users find GC×GC to be a more effective method for analyzing complex samples” (13), and “although GC×GC is a great multidimensional approach and has gained a lot of popularity, it is also true that in much published research, classical MDGC would have probably provided a better analytical result” (14). Our experience is fully in line with these statements. GC×GC can indeed do an excellent job in ‘sample imaging’ for profiling and comparing complex samples, or in group-type separations of petrochemicals, for instance. However, most GC×GC applications published over the last few decades are not really optimized (column selection, flow conditions, modulation, temperature programming, and so on). As a result, their overall performance is much lower than what can be achieved. Moreover, the GC×GC literature is flooded with applications that concentrate on the beauty of the contour plot rather than on producing useful data! Perhaps more worryingly, essential information on sample preparation and sample injection, both of utmost importance for the correctness and reproducibility of data, is not provided. Judging from hundreds of papers and presentations given at international meetings in recent years, one would conclude, strangely, that these problems do not exist when GC×GC is used. And although all GC work should ultimately lead to quantitative data, this too appears to be a minor issue when GC×GC is applied. Are colorful plots really more important than meaningful quantitative data? Apparently so.
It is high time that the proponents of the technique proved its real performance by publishing validated studies, analysis data of certified reference samples, results of round robin tests, and so on. Our criticism does not at all mean that we do not appreciate the power of GC×GC for a number of important applications, such as the characterization of petroleum products or non-targeted analysis in metabolomics studies. A recent publication clearly demonstrates that optimized GC×GC can indeed deliver its theoretical potential, namely increasing the peak capacity in a single run by an order of magnitude (15). However, to make the shift from R&D to QA/QC, for example for targeted analyses, performance must still be proven. This requires developing standard operating procedures that can be validated by analysis of certified samples. We believe that, for targeted analysis, it will be difficult to beat the performance of state-of-the-art 1D GC and heart-cutting 2D-GC in combination with MS/MS detection!
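The ‘order of magnitude’ gain in peak capacity follows from the idealized product rule, in which the two-dimensional peak capacity is the product of the first- and second-dimension peak capacities. In practice, the modulation period undersamples first-dimension peaks. A commonly used correction, attributed to Davis, Stoll and Carr, divides the product by β = √(1 + 0.21 (t_s/¹σ)²). Here t_s is the modulation period and ¹σ is the first-dimension peak standard deviation. The sketch below assumes Gaussian peaks, a peak capacity of window/(4σ) in each dimension, and a second-dimension window fully used within each modulation period. All numbers and function names are hypothetical.

```python
import math


def peak_capacity(window_s: float, sigma_s: float) -> float:
    """Idealized peak capacity: separation window divided by a 4-sigma peak width."""
    return window_s / (4.0 * sigma_s)


def undersampling_factor(modulation_period_s: float, first_dim_sigma_s: float) -> float:
    """Davis-Stoll-Carr correction: beta = sqrt(1 + 0.21 * (t_s / sigma_1)**2)."""
    return math.sqrt(1.0 + 0.21 * (modulation_period_s / first_dim_sigma_s) ** 2)


def effective_gcxgc_peak_capacity(first_window_s: float, first_sigma_s: float,
                                  modulation_period_s: float, second_sigma_s: float) -> float:
    """Product-rule peak capacity corrected for first-dimension undersampling."""
    n1 = peak_capacity(first_window_s, first_sigma_s)
    n2 = peak_capacity(modulation_period_s, second_sigma_s)  # 2D window = modulation period
    return n1 * n2 / undersampling_factor(modulation_period_s, first_sigma_s)


if __name__ == "__main__":
    # Hypothetical: 60 min first-dimension window, 1.5 s 1D sigma,
    # 5 s modulation period, 50 ms 2D sigma.
    n1 = peak_capacity(3600.0, 1.5)
    n2 = peak_capacity(5.0, 0.05)
    n_eff = effective_gcxgc_peak_capacity(3600.0, 1.5, 5.0, 0.05)
    print(f"1D: {n1:.0f}, product rule: {n1 * n2:.0f}, corrected: {n_eff:.0f}")
```

With these hypothetical settings, the uncorrected product is 15 000 and the undersampling-corrected value is about 8 200. That is still more than ten times the 600 of the first dimension alone. Poorly chosen modulation or flow settings would shrink that margin, which is one reason why optimization matters.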

So, What’s Next?

Many years ago, at one of the Riva meetings, Koni Grob stated, “in a field such as CGC, there is no standstill, there is either progress or degradation” (12); see Figure 2. Is it not time that we took that statement seriously once again? There is definitely a need for more fundamental training in CGC, including hands-on training. CGC is far from a black box technique and will, hopefully, never become one! New GC methodology and applications should be optimized according to the fundamentals described in key papers. Results should be critically evaluated and correctly compared with existing technology before publication. Solutions should be fully validated in terms of accuracy, reproducibility and robustness. In that respect, the controversial paper on thermal degradation of solutes in metabolomics studies can be considered an interesting contribution, given that it questions many aspects of routine CGC. It is therefore the task of researchers applying CGC to evaluate their data critically before submitting them for publication. Journals, too, should adapt their requirements for ‘novelty’ and give quality and scientific correctness the highest priority. This view draws on our experience in GC and on some recent papers that do contain innovative ideas. We believe that CGC, including 2D-GC (heart-cutting and GC×GC), can indeed continue to grow at a steady pace, guaranteeing high quality, accuracy and robustness, and making the technique even more valuable in many application fields. New CGC equipment incorporating chip technology, new column formats, new column connections, flow chips and so on will help new GC practitioners set up optimized and robust GC methods. The recently introduced Agilent Intuvo 9000 GC already incorporates some of this technology. Over the next decade, we expect several further evolutions in CGC technology that will make the technique more user-friendly and rugged.
In combination with new developments in sample preparation placed on-line with the CGC instrumentation, we will undoubtedly see a further expansion of GC as a powerful analytical tool for quality control in many application areas. These include the life sciences, where GC can prove its complementarity to LC-MS.

Frank David is R&D Director Chemical Analysis, Koen Sandra is Scientific Director, and Pat Sandra is President, Research Institute for Chromatography, Kortrijk, Belgium (www.richrom.com).

Acknowledgement: Dirk Joye of Tiense Suikerraffinaderij, Tienen, Belgium, is thanked for providing the high-temperature GC (HTGC) chromatogram of oligofructose.

References

1. MJE Golay, in Gas Chromatography, DH Desty (Ed), Academic Press, New York, 1958, pp 36–55.
2. LM Blumberg, MS Klee, J Microcol Sep 12, 508–514 (2000).
3. MS Klee, LM Blumberg, J Chrom Sci 40, 234–247 (2002).
4. RD Dandeneau, EH Zerenner, J High Resolut Chromatogr 2, 351–356 (1979).
5. K Grob, Classical Split and Splitless Injection in Capillary GC, Hüthig Verlag, Heidelberg, ISBN 3-7785-1142-4 (1985). Also available as: K Grob, Split and Splitless Injection for Quantitative Gas Chromatography: Concepts, Processes, Practical Guidelines, Sources of Error, 4th, Completely Revised Edition, Wiley, ISBN 978-3-527-61288-8 (2008).
6. K Grob, On-Column Injection in Capillary GC, Hüthig Verlag, Heidelberg, ISBN 3-7785-1551-9 (1987).
7. G Schomburg et al., in Sample Introduction in Capillary Gas Chromatography, Volume 1, P Sandra (Ed), Hüthig Verlag, Heidelberg, Chapters 4, 5 and 6 (1985).
8. M Fang et al., Anal Chem 87, 10935–10941 (2015).
9. S Boman, C&EN 93(42), 25–26 (2015).
10. K Burgess, The Analytical Scientist 38, 18–19 (2016).
11. P Sandra et al., Chromatographia 8, 499–502 (1975).
12. K Grob, lecture presented at the 23rd ISCC, June 5–10, 2000, Riva del Garda, Italy.
13. JV Seeley, SK Seeley, Anal Chem 85, 557–578 (2013).
14. PQ Tranchida et al., Anal Chim Acta 716, 66–75 (2012).
15. MS Klee et al., J Chromatogr A 1383, 151–159 (2015).