What's the latest from your lab?

We are continuing our efforts to develop a practical system for temperature programming of the second-dimension column in GC×GC, which can increase the 2D peak capacity by as much as 50 percent. We have developed a universal system in which a single metal 2D column serves three roles, as separation column, heater, and temperature sensor.

What are the key trends in GC×GC?

After a long period of relative stagnation, several new modulators have been introduced recently. While none of them are truly ground-breaking, they provide the users with more options. Plus, there are numerous interesting applications of GC×GC, as well as further advances in data handling and processing.

What challenges face your field?

The perceived complexity of the technique makes many users shy away from it, not helped by the difficulties with handling and processing huge data files. While GC×GC can produce a lot of data in a short time, extracting useful information from these data is not easy. On top of that, many users spend very little time on optimizing the separation itself. This can only be overcome by education on the one hand, and advanced “big data” techniques (involving artificial intelligence) on the other.

Predictions and aspirations?

It is more a dream than a prediction, but within my lifetime I would like to see GC×GC used for the most challenging samples in every gas chromatographic laboratory. As for my research, the goal has always been to provide the users with better tools to perform GC×GC separations, and I plan to carry on doing this until I retire.

What’s the latest from your lab?

Our focus is applying GC×GC in the forensic sciences. We are working on demonstrating chromatographic parameters related to calibration and peak quality when converting a traditional gas chromatography-quadrupole mass spectrometry (GC-qMS) instrument into a comprehensive two-dimensional gas chromatography-quadrupole mass spectrometry/flame ionization detection (GC×GC-qMS/FID) instrument. This is accomplished via retrofitting with a reverse fill/flush modulator and coupling the two detectors to get the best selectivity and quantification. One of the major obstacles for forensic laboratories in implementing multidimensional approaches is the lack of quality assurance parameters. By retrofitting commonly used instruments, we hope to provide benchmark data to help laboratories justify the adoption of GC×GC. This is of the utmost importance in the forensic sciences, as calibration data and quality assurance are critical components of demonstrating valid science in a courtroom.

What are the key trends in GC×GC?

Many industries are now starting to implement GC×GC as a core component of their workflow, sparking debate in the community regarding areas for improvement. Key topics discussed in Texas this year were the need for uniform reporting in publications, more published full validation studies, and tools for understanding the complexity of batch data. There was a constructive dialogue between industry professionals, academic researchers, and vendors that I believe will have a positive impact moving forward.

Flow modulation – both reverse fill/flush (RFF) and diverting flow modulation – seems to be gaining ground compared with the thermal modulation seen in most published studies to date. The emergence of commercial tools for flow modulation will open new doors for the field of GC×GC; this will be interesting to observe in the coming years.

What challenges face your field?

High-quality GC×GC separations can reveal a wealth of data that was previously “hidden”. We have moved from single sample analysis studies to studies that involve large batches, longitudinal analysis, multiple channel detection, and so on. This complexity in study design leaves us wading through mountains of data, trying to extract meaning. A discussion group held during the GC×GC symposium highlighted the wide range of data treatment strategies currently used, ranging from commercial software tools to a variety of in-house custom software. I believe we need to make data handling and interpretation simpler and more meaningful, but maintain high analytical quality and valid statistical foundations – a tricky path to navigate.

Predictions and aspirations?

My goal has always been to rely on strong foundations of chromatography but bring an “end user” flair to the way we think about GC×GC. We can do the best chromatography in the world, but if GC×GC isn’t adopted for the applications it can benefit most, we have not done our job. Our next phase of research will provide data on hardware tools, statistical interpretation techniques, and communication strategies to allow the adoption of GC×GC in crime laboratories. I hope to see forensic investigations put the wealth of information that GC×GC can provide to good use in investigating homicides, missing persons cases, and mass disasters. To learn more about GC×GC and how it could benefit your laboratory, join me at the 11th Multidimensional Chromatography Workshop in Honolulu, Hawaii on January 5-7, 2020. It’s free to register and a great opportunity to network with experts in the field. www.multidimensionalchromatography.com

What’s the latest from your lab?

As our lab continues to grow, two main research directions have emerged: fundamental studies in ultra-high-pressure liquid chromatography (UHPLC) and miniaturization of analytical measurement platforms.

One of our newer projects sits at the interface of these two areas: utilizing portable LC instrumentation for targeted analysis in point-of-care and field settings. As a young graduate student, I always envisioned trying to work with a system like this; based on our initial testing, I think a lot of exciting new developments will be achieved over the coming months and years.

What are the key trends in UHPLC?

At this year’s ISCC meeting, there were many posters focused on sample preparation, especially utilizing supercritical fluids and solid-phase microextraction. The use of new detectors was also a prominent topic, especially vacuum ultraviolet detection and catalytic reactor technology coupled to FID for GC and light-emitting diode sources for LC and ion chromatography (IC) separations. In terms of applications, monoclonal antibodies and antibody–drug conjugates remain hot topics for separation scientists, with a lot of work focusing on mapping the glycosylation of these biomolecules.

Throughout the meeting, the close relationship between capillary LC and MS for omics applications was apparent. As part of the capillary LC panel discussion, involving a number of leaders in the field, the need for a better interface between capillary LC columns and MS inlets was identified as a major goal for the next few years.

What challenges face your field?

Although capillary LC is commonly employed by researchers and in core facilities, its perception among chromatographers as “less robust” and “difficult to use” will limit its entry into routine analysis. New strategies for integrated chromatographic systems using capillary LC will help overcome these hurdles, but it will still take some time for the solutions to become fully integrated into standard workflows. Capillary LC is a critical separation technique in biomedical research, but is still seen as a “niche tool” by some in industry due to low sales relative to analytical and prep-scale columns.

Across all research areas, experimental reproducibility is a growing issue that plagues the scientific endeavor. Our use of open-source strategies to share all details of new platforms with potential users, along with non-proprietary data formats, is one way to combat this issue in the realm of analytical instrumentation.

Predictions and aspirations?

Many of the hurdles that limit our ability to further push the pressure limits of LC are in-depth engineering problems that may require entirely new approaches to how we imagine LC instruments. Thus, I believe that chromatography researchers will shift some of their focus back towards examining unique stationary phase selectivities. While C18 will likely continue to dominate, other reversed-phase column types and HILIC-based methods will find favor in specific application areas.

In my lab, we will continue to refine the ways that current instrumentation can be used to increase the throughput of widely used methods. We have just started to scratch the surface of integrating single-board computers into low-cost platforms, and hope to make further advances in this area in the coming years. Finally, we are excited by the newfound ability to perform separations with a battery-operated, field-portable LC – my students and I are dreaming of all the different ways we can utilize this new tool!

What’s the latest from your lab?

The lab is involved in the development and use of both heart-cutting and comprehensive 2D chromatography systems (GC, LC and supercritical fluid chromatography) combined with powerful forms of detection. My own research focuses on the use of state-of-the-art GC-MS instrumentation, with particular focus on GC×GC-MS systems. At the moment, I am working on the development of consumable-free forms of modulation, comprising both pneumatic and thermal desorption devices.

What are the key trends in multidimensional chromatography?

Conference presentations on multidimensional chromatography development are gradually being replaced by talks on applications of multidimensional chromatography-MS. Inevitably, instrumentation is becoming smaller, more powerful, automated and stylish, equipped with do-it-all software and leaving little to the imagination and the intervention of the operator.

What challenges face GC×GC?

Although the benefits have been recognized for decades, we have yet to see widespread acceptance of GC×GC, or incorporation in official methods. In my opinion, there are several reasons for this:

- GC×GC is seen as complicated technology, mainly confined to high-tech research laboratories.

- 1D chromatography combined with MS remains a sufficiently powerful approach in many instances.

- High cost of purchase and operation.

- The revolutionary nature of the technology.

Predictions and aspirations?

I hope to develop a unified GC-MS/GC×GC-MS instrument that is easy to use and affordable. I believe the ability to switch between 1D- and 2D-GC in a single instrument would lead to a much wider diffusion of GC×GC.

What’s the latest from your lab?

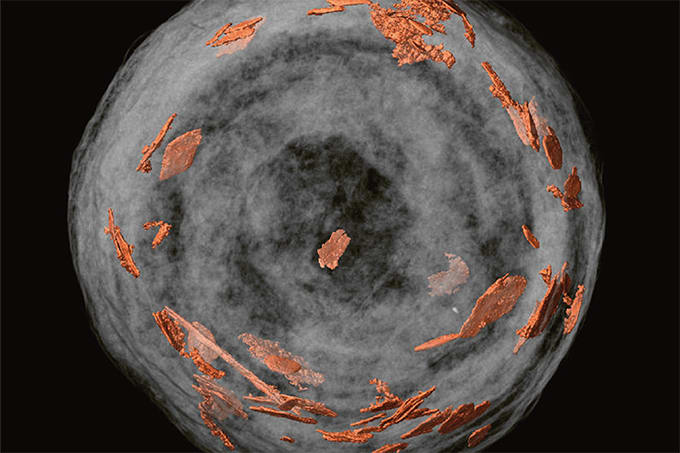

Our lab has always been focused on the development and implementation of comprehensive two-dimensional gas chromatography-high resolution time of flight mass spectrometry (GC×GC-HR-TOF MS) solutions for a range of applications, mostly in the forensic and petrochemical arenas. Over the past few years, we have grown more involved in medical research, specifically in volatilomics. We use a multi-sample and multi-technique approach in combination with convergent data processing tools to take an untargeted view of important medical questions. Right now, we are trying to understand inflammation processes in the lung, using exhaled breath analysis (GC×GC-HR-TOF MS, SIFT-MS and others).

What are the key trends in GC×GC?

For me, data science for processing and method development is the real hot topic. Analytical methods are more and more multidimensional, and the data generated are more and more complex. New tools are needed to help users get the best out of their analyses. On the hardware front, the general trend is away from expensive cryo-fluids for the modulation process and towards solutions based on flow or cryo-free thermal modulators, which should make GC×GC more attractive for routine applications.

What challenges face your field?

The next big step for GC×GC is the launch of tailor-made integrated solutions. Now that we have robust and user-friendly hardware, we must develop the software to allow more specialized GC×GC methods. These software solutions will have to integrate hardware control, optimization tools, and data processing in a single package. We need to make the transition from GC to GC×GC simpler and faster.

Predictions and aspirations?

My goal is to continue our work on data processing solutions to merge data from GC×GC and other analytical platforms and obtain true untargeted analysis. I believe that the key role of the analytical chemist is now at the border between chemical analysis and data science. High-quality chemical data will allow us to build robust models using advanced data science tools. This powerful combination is the only way to achieve robust omics screening to answer the big challenges of science.

What’s the latest from your lab?

My lab uses microelectrophoretic separations to look at cellular stress responses in the social amoeba Dictyostelium discoideum. This organism has a unique social lifecycle, in which the normally unicellular organisms aggregate and differentiate into a multicellular superorganism when deprived of nutrients. We use capillary electrophoresis to make quantitative measurements of enzyme activity in the cells during this process. Now, we are transferring our capillary separations to a microchip format, with the goal of making single-cell measurements.

What are the key trends in bioanalytical separations?

Biological systems have great chemical complexity, and as analytical scientists move from the genome to the proteome to the metabolome the complexity only increases. Fred Regnier’s keynote address at ISCC was a great example of how separations can help us to capture the full range of biomolecular information. Glycomics and glycoproteomics also featured prominently at ISCC this year, including talks covering MS, CE-MS, labeling, and enzymatic processing, to name just a few. Another trend was highlighted by a great session on new tools, with many talks eschewing complex systems and instead presenting simple, custom solutions for exciting new applications. Vince Remcho’s work on paper devices, Adam Woolley’s work on truly microscale 3D printing, and Jim Grinias’ overview of the history and current state of open-source instrumentation are all great examples of this approach.

What challenges face the field?

A key challenge in analyzing biological samples is their complexity, so maximizing peak capacity, developing multidimensional separations, and improving specificity are all important goals. As biological applications of mass spectrometry grow and mass spectrometers become more powerful and more sensitive, the ability to couple separations to MS is ever more important. Another challenge is working with the “big data” generated by multidimensional separations coupled with MS-MS detection. These data sets often need sophisticated statistical analysis to draw out trends or identify key features that distinguish sample groups. Analytical educators need to make sure that the next generation of separation scientists knows how to select and implement effective data analysis methods from the long list of those available.

Predictions and aspirations?

Working with microfluidics has shown me how a technology can develop from “bleeding edge” science to a mainstream tool, and I think we’ll see the same maturation of many of the new technologies presented at ISCC. When you develop a new tool it’s exciting to be the only lab in the world capable of a certain measurement, but if these advances are restricted to specialists, they will never meet their full potential. I hope to continue to develop the robustness and accessibility of new tools until they are available in all sorts of labs.