Why does the analysis of proteins remain so important?

Andrea Gargano: The analysis of proteins is essential for understanding the complexity of the communication that takes place in our bodies. Improving tools for protein analysis has important implications for medical science, where we aim to understand the mechanisms of action of bioactive molecules, find (bio)markers for diseases, and characterize new classes of pharmaceuticals (for example, antibodies). Developments in protein analysis promote research at the boundary between biology and chemistry; namely, biochemistry, systems biology and bioengineering. Moreover, recent progress in proteomic research has demonstrated that advanced analytical tools for protein analysis open up new possibilities in fields beyond protein science, such as polymer and biopharmaceutical research. In essence, protein analysis is important because it is an analytical challenge with big implications.

Koen Sandra: Proteins have many functions – structural (keratin in hair, collagen in bones and skin), mechanical (myosin/actin in muscle movement), transport (hemoglobin for oxygen transport in blood), defense/immune (antibodies), catalytic (enzymes in biochemical reactions), hormonal (insulin regulating glucose metabolism) – and the list goes on. Amongst many other benefits, analysis of proteins can lead to the discovery of novel drug targets and biomarkers for disease diagnosis, prognosis, and prediction, and is key to the concept of personalized medicine. Proteins themselves are also on the rise as therapeutics; hence, from a biopharma perspective, accurate analysis is essential.

Shabaz Mohammed: If it’s not already clear from Koen and Andrea’s answers, I’ll add that a significant number of diseases, including many types of cancer, can be related to the dysfunction of proteins and their interactions. Thus, characterizing their structure, function and interactions is of the utmost importance.

John Yates III: Proteins are the operational agents of cells. They form structures, they transmit signals, they catalyze reactions to form metabolites, they form protein complexes. If you want to know how cells work, you have to study proteins.

How far are we from characterizing the proteome of complex organisms?

AG: We are a long way off – potentially 100,000 proteoforms (90 percent) away from the entire proteome of a complex living organism (including ourselves). However, important results have been achieved with current technology, supporting genomic and transcriptomic results and enabling discoveries in biomarker research. So far, we can characterize only the tip of the proteo-berg. To better grasp the complexity of the proteome, we need better analytical tools, as well as chemometric and statistical tools capable of refining and rationalizing the large amount of data that we are collecting.

SM: The completion of genomes and the massive improvements in the last decade in cell manipulation, protein chemistry, chromatography and mass spectrometry allow one to identify pretty much any protein in a cell. There are still a few exceptions, such as very low copy number proteins or those with ‘difficult’ physicochemical properties (for example, hydrophobicity in the form of transmembrane domains) – and there are some proteins that suffer from both sets of problems, such as olfactory receptors. Given enough time and resources, one can generate evidence of presence for pretty much all the proteins in a particular type of cell. However, it isn’t something that is often performed because the resources and time required to carry out such a feat are huge – and there aren’t really any good reasons to carry out such an experiment. We often limit ourselves to a few days’ mass spec time for an experiment, and the depth achieved (approximately 8,000-10,000 protein families for human cells) is sufficient for most biological questions. Consequently, the jump to characterizing all the distinct types of cells in an organism, of which humans have hundreds, is a far-off dream.

JY: I’d say we’re pretty close to being able to identify the presence of all proteins in a mammalian cell. But the difficulty is knowing how many proteins are really there. After all, protein expression varies with conditions. Additionally, the complete proteome will encompass protein isoforms and modified forms for all expressed genes – and that is a pretty large set of proteins.

What are the strongest arguments for using 2D-LC in proteomics?

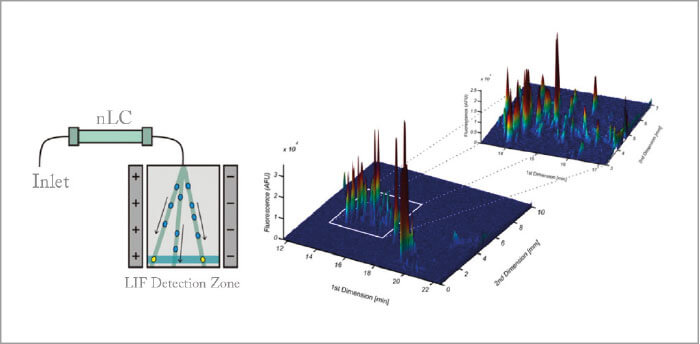

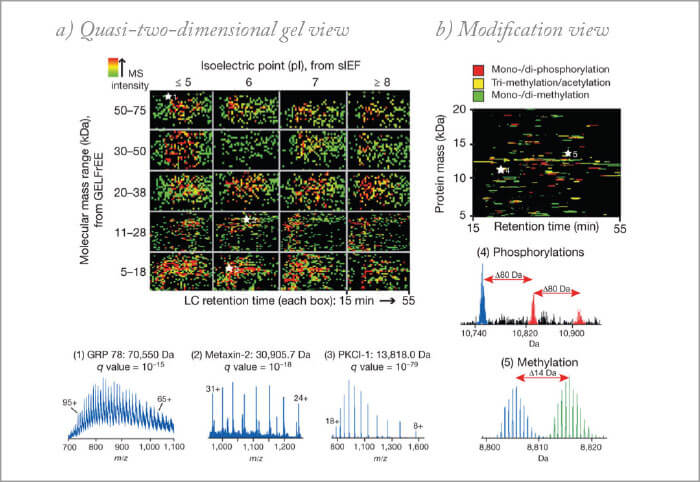

KS: Why has proteomics historically been performed using 2D-PAGE instead of 1D SDS-PAGE? Because one can identify and quantify many more proteins. The same conclusions can be drawn when evolving from 1D to 2D-LC in proteomics. The inability of 1D-LC to adequately resolve real-world mixtures of high complexity is the driving force behind using multidimensional separations. It is no surprise that the field of proteomics is widely adopting the technology given the enormous sample complexity encountered. Simple unicellular systems already contain thousands of proteins, and for now, one can only speculate on the complexity of the clinically valuable and most complex human serum/plasma proteome. Because peptides are easier to handle than proteins, proteins are proteolytically digested prior to downstream processing, so every protein is represented by dozens of peptides. It is not unheard of to be confronted with thousands of peptides, spanning a wide concentration range, that have to be introduced into the mass spectrometer in a way that allows successful qualitative – and quantitative – measurement. Multidimensional LC possesses the additional resolving power to substantially reduce the complexity of such peptide mixtures and, therefore, to increase the number of measurable peptides, to widen the overall dynamic range and consequently to increase proteome coverage. In addition, the implementation of an extra separation dimension has also proved useful in the targeted analysis of proteins. In contrast to the above-described discovery proteomics approach, targeted analysis does not require the analysis of the entire first dimension.

AG: State-of-the-art 1D-LC chromatographic setups, using long columns (0.5 to 1 m) packed with materials of small particle size (2 µm) and shallow gradients (1 to 6 h), can provide peak capacities up to 1500. However, proteomics samples contain hundreds of thousands (if not millions) of components and thus, during LC-MS analysis, mass spectrometry instruments have to deal with complex mixtures of peptides present in vastly different concentrations. Co-elution leads to ion-suppression effects that, together with MS dynamic range limitations, compromise the analysis of low-abundance species and thus limit the depth of proteomic investigations. As Koen notes, online comprehensive two-dimensional liquid chromatography (LC×LC) enables deeper investigations of the proteome because of its higher resolving power. Moreover, LC×LC can combine chromatographic methods with different retention mechanisms (such as ion exchange, hydrophilic interaction LC, or high pH reversed-phase × low pH reversed-phase). As a result, sample components can be separated with orthogonal selectivities. In addition, the fast and efficient second dimension separations enabled by UHPLC technology significantly reduce the peak widths (typically ranging from below 1 to 10 s), enabling higher peak capacities per unit time than 1D-LC (more than 1000 in less than 1 h).

SM: I can echo Koen and Andrea. The first step in most protein characterization experiments involves chopping up proteins into peptides. To comprehensively identify a human proteome, one needs to handle a peptide mixture that numbers in the millions and spans a dynamic range of 7-9 orders of magnitude. The pace of improvement in 1D LC-MS is astonishing; however, issues remain and there are certain hard limits that haven’t been addressed. The latest mass spectrometers have (successful) sequencing rates of around 10-20 peptides per second and, with state-of-the-art UHPLC systems, one can hope to identify 20-40k peptides in a single analysis. Increasing those numbers won’t be easy since the dynamic range of a mass spectrometer is (at best) five orders of magnitude and current MS systems can just about sample peptides at the bottom of their restricted dynamic range. Increasing speed doesn’t really improve the situation since the mass spectrometers can’t collect sufficient populations of the low-abundance peptides for a successful sequencing event because of the limited dynamic range. It has also been predicted that improvements in the resolving power of UHPLC systems will plateau at around double current values. Thus, the only way to increase capacity is to fractionate, and the most powerful and sensitive (by far) method of fractionation is an additional round of chromatography.

JY: To get the complete mammalian cell proteome as described above will require a tremendous peak capacity and it is unclear if 1D LC can deliver that level of performance. Moreover, developments occurring in ultra-high resolution 1D LC, of course, can be adapted for 2D LC.

Andrea Gargano is a postdoctoral researcher at the University of Amsterdam, specializing in two-dimensional liquid chromatography. In the summer of 2015, Gargano was awarded a Veni grant from the Netherlands Organization for Scientific Research (NWO) and he is currently working on the development of (multi-dimensional) separation strategies for the characterization of intact proteins.

Koen Sandra is Scientific Director at the Research Institute for Chromatography (RIC), which provides world-class chromatographic and mass spectrometric support to the chemical, life sciences and (bio)pharmaceutical industries. As a non-academic scientist, Sandra is author of over 40 highly-cited scientific papers and holder of several patents related to analytical developments in the life sciences area.

Shabaz Mohammed, after finishing his PhD in mass spectrometry, moved to Denmark to work with Ole Jensen in the field of proteomics and, in particular, the development of techniques for improving protein information. In 2008, Mohammed became group leader and Assistant Professor in Utrecht and worked with Albert Heck at the Netherlands Proteomics Centre. In 2013, he moved to the University of Oxford, where he is now Associate Professor.

John Yates III is Ernest W. Hahn Professor of Chemical Physiology and Molecular and Cellular Neurobiology at The Scripps Research Institute, La Jolla, California, USA. Yates was recently named Editor of the Journal of Proteome Research.
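
The peak-capacity figures quoted in the answers on 2D-LC can be related with a rough calculation. The sketch below is illustrative only and is not taken from the panelists: it assumes the common textbook approximation that a gradient run of duration t_g with average peak width w resolves about 1 + t_g / w peaks, and the ideal product rule for LC×LC, which is an upper bound that ignores undersampling and imperfect orthogonality. The function names and the example numbers (a 20 s second-dimension gradient, 1 s peaks, a first dimension resolving 50 peaks) are hypothetical.

```python
def gradient_peak_capacity(gradient_time_s: float, peak_width_s: float) -> float:
    """Estimate the peak capacity of one gradient run as 1 + t_g / w."""
    if gradient_time_s < 0 or peak_width_s <= 0:
        raise ValueError("gradient time must be >= 0 and peak width must be > 0")
    return 1.0 + gradient_time_s / peak_width_s


def ideal_2d_peak_capacity(n_first: float, n_second: float) -> float:
    """Product rule: an upper bound that ignores undersampling and
    assumes the two dimensions are fully orthogonal."""
    return n_first * n_second


if __name__ == "__main__":
    # Hypothetical values, chosen only for illustration.
    n_second = gradient_peak_capacity(gradient_time_s=20.0, peak_width_s=1.0)
    n_total = ideal_2d_peak_capacity(n_first=50.0, n_second=n_second)
    print(f"second dimension: {n_second:.0f}, ideal LCxLC: {n_total:.0f}")
```

Under these assumptions, even a modest first dimension combined with a fast second dimension lands in the range of the "more than 1000" figure mentioned for LC×LC; real separations fall short of the product-rule bound.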

Where has 2D-LC had the largest impact?

SM: There are a significant number of examples demonstrating the power of multidimensional chromatography and it’s quite difficult to highlight a particular example. There are entire fields that depend upon it. Characterizing how signals are transported through a cell often requires an understanding of the behavior of proteins being phosphorylated. The presence or absence of this small chemical group on a protein can determine whether the protein is active, where it is in a cell, and with what it interacts. Phosphorylated proteins are low in abundance (occupying the lower levels of protein dynamic range) and such events are thought to number in the hundreds of thousands, if not millions, in a cell at any time. Identifying these events and their meaning often requires multiple rounds of chromatography for enrichment and complexity reduction.

AG: Offline 2D-LC has been widely used as a pre-fractionation strategy (prior to or after protein digestion) to reduce the complexity of single shotgun RPLC-MS analysis. However, the long times required and big advancements in UHPLC-HRMS have limited the spread of such workflows. An area where 2D-LC will continue to expand its impact is the selective analysis of part of the proteome, enriching certain protein or peptide species using affinity tags and/or special sorbents.

KS: I worked at a molecular diagnostics company for several years, where we were applying proteomics to discover and verify disease biomarkers. Using 2D-LC, we could mine the otherwise hidden proteome (hidden biomarker candidates), which came in particularly handy in the discovery programs. 2D-LC, in yet another format (targeted), was also successfully implemented in our biomarker verification workflows. Now that my focus has in recent years shifted more to biopharmaceutical analysis, 2D-LC based proteomics technologies again come in very handy to identify and quantify host cell proteins (HCPs). While in a 1D chromatographic set-up the separation space is dominated by peptides derived from the therapeutic protein, the increased peak capacity provided by 2D-LC allows one to look substantially beyond the therapeutic peptides and detect HCPs at low levels (sub-ppm relative to the therapeutic). We have also used 2D-LC in the quantification of therapeutic proteins in blood plasma to support (pre-)clinical development (pharmacokinetic studies), which is technically identical to biomarker verification. We have even validated these methods according to EMA guidelines. In these projects, the first dimension is used to reduce the matrix complexity prior to second dimension LC-MS analysis using multiple reaction monitoring. Because of matrix effects associated with 1D-LC-MS, one only obtains sensitivities in the high ng/mL range. Incorporating that extra dimension allows one to reach the low and even sub-ng/mL levels in blood plasma/serum.

Are there any shortcomings to the technique?

SM: Coupling multiple rounds of chromatography is still not a trivial task. Multidimensional chromatography is still, mostly, used by committed analytical chemists. Sensitivity is of paramount importance in proteomic experiments. HPLC columns often lead to unacceptable losses unless attention is paid to how the sample is brought to each chromatographic system and how it is treated after fractionation. Miniaturization plays a huge role in proteomics and it is often not straightforward to reduce dimensions and flow rates while increasing column pressures. A significant amount of know-how about the various flavors of chromatography and the underlying science is required to pick and build multidimensional systems for certain types of proteomic experiments. That said, for unmodified ‘regular’ proteins, a consensus is being reached and so certain two-dimensional configurations have now hit mainstream science.

AG: The major drawbacks of comprehensive 2D-LC (LC×LC) are: long analysis time (typically several hours), increased dilution (thus reduced sensitivity) in comparison with 1D-LC, and the complexity of method optimization. In analytical-scale separations, the introduction of systems with reduced dispersion volumes and UHPLC technology has drastically reduced the analysis time of LC×LC, enabling second dimension separations of about 20 seconds for a full gradient elution run. This is not yet the case for nano 2D-LC setups that are used for sample-limited applications, such as proteomic research. Here, due to dead and dwell volumes, the second-dimension cycle typically takes much longer (more than 10 min). In the coming years, further advancement in the miniaturization of LC apparatus (e.g. chip-based chromatography) will enable faster 2D separation cycles and thus facilitate the development of faster LC×LC applications. Several groups are working on the reduction of dilution in LC×LC, and promising results are coming from the use of trap cartridges to collect fractions from the first dimension (what we call “active modulation”) and inject small volumes into narrow second dimension columns. Method optimization remains a challenge. However, software solutions to reduce the time and effort required to optimize two-dimensional methods will help analytical scientists in this task.

KS: In addition to the technical challenges already mentioned, I believe one of the shortcomings is related to nomenclature. All kinds of different terms are being used to describe the way 2D-LC separations are performed (comprehensive, heart-cutting, LCxLC, LC-LC, off-line, on-line, automated off-line, and so on). In some ways, this is not surprising given that the technology has been developed from two different angles (proteomics and chromatography, respectively). In multidimensional GC, there are no ambiguities around nomenclature/terminology. People often contact us to ask which kind of 2D-LC they are actually performing. Importantly, depending on how 2D-LC is performed, one is confronted with flow and mobile phase incompatibilities, reproducibility issues, immature data analysis software, and so on. In the early days of using 2D-LC in proteomics, repeatability and reproducibility were not considered to be important issues. Now that the technology is really being applied to solve problems, these classic figures of merit have become paramount.

JY: The biggest shortcoming is the time required to perform the analysis, which limits the number of experiments that can be performed. Let’s hope higher throughput methods for 2D-LC can be developed.
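
The effect of second-dimension cycle time on total analysis time can be illustrated with simple arithmetic. The sketch below is an assumption-laden illustration, not a method described by the panelists: it treats the run time of an online LC×LC analysis as the number of first-dimension fractions multiplied by the second-dimension cycle time, and the fraction count of 180 is hypothetical. The cycle times of about 20 s (analytical scale) and 10 min (nano scale) are the figures quoted above.

```python
def lcxlc_run_time_hours(n_fractions: int, cycle_time_s: float) -> float:
    """Approximate total run time, assuming one second-dimension cycle
    per first-dimension fraction and no additional overhead."""
    if n_fractions < 0 or cycle_time_s < 0:
        raise ValueError("fraction count and cycle time must be non-negative")
    return n_fractions * cycle_time_s / 3600.0


if __name__ == "__main__":
    n_fractions = 180  # hypothetical number of first-dimension fractions
    for label, cycle_s in (("analytical, 20 s cycle", 20.0),
                           ("nano, 10 min cycle", 600.0)):
        hours = lcxlc_run_time_hours(n_fractions, cycle_s)
        print(f"{label}: {hours:.1f} h")
```

With the same hypothetical number of fractions, the slower nano-scale cycle stretches a one-hour analysis to more than a day, which is the throughput limitation the panelists describe.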